What’s Generative AI?

Generative artificial intelligence (AI) is technology that can create text, images, audio, and synthetic data. The recent hype around it stems from how easily users can now produce high-quality text, graphics, and video in seconds.

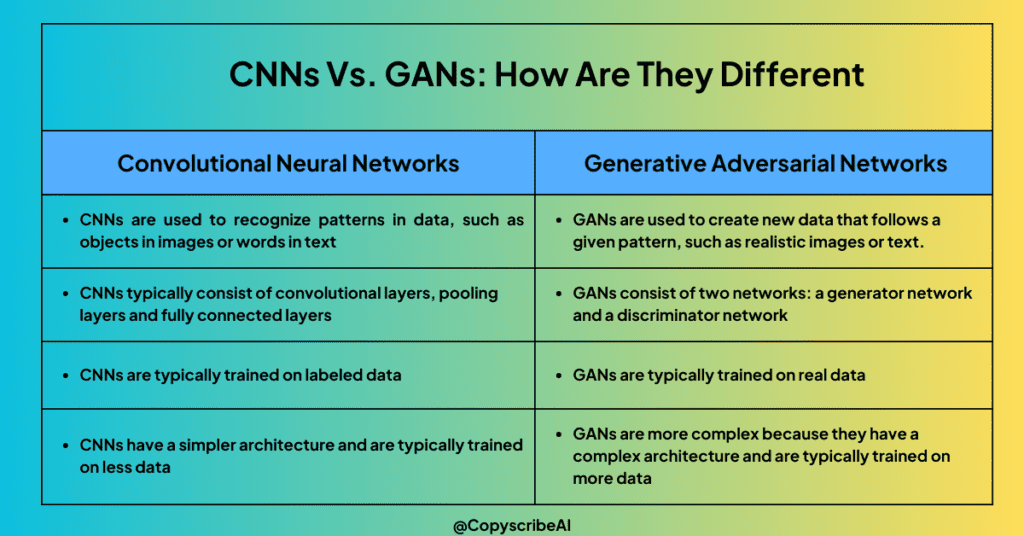

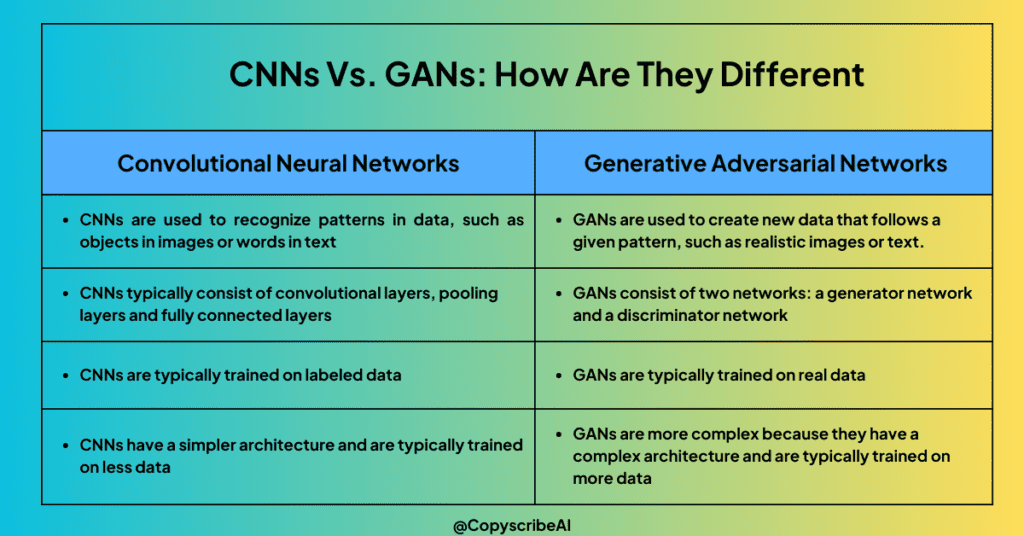

The technology isn’t brand-new (chatbots using it date back to the 1960s), but it took a leap in 2014 with the introduction of generative adversarial networks (GANs), a type of machine learning algorithm. GANs enabled generative AI to create convincingly realistic images, videos, and audio of real people.

This new ability has opened doors for improved movie dubbing and enriched educational content. However, it has also raised concerns about deepfakes, digitally altered images or videos, and business cybersecurity threats, including deceptive requests that convincingly imitate a boss.

Two more recent breakthroughs, discussed in more detail below, have been critical to generative AI going mainstream: transformers and the breakthrough language models they made possible.

Transformers, a type of neural network architecture, allowed researchers to train ever-larger models without having to label all of the data in advance. New models could therefore be trained on billions of pages of text, producing answers with more depth. Transformers also introduced attention, a mechanism that lets models track connections between words across pages, chapters, and books rather than only within individual sentences. Because they can track connections in any kind of sequence, transformers can also analyze code, proteins, chemicals, and DNA.
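To make the “no pre-labeling” point concrete, here is a minimal Python sketch of how raw text can supervise itself: the target for each position is simply the token that follows it. This is the idea behind GPT-style next-token prediction (BERT instead predicts masked tokens). The function name `next_token_pairs` and the whitespace tokenization are illustrative assumptions, not part of any specific library.

```python
from typing import List, Tuple


def next_token_pairs(tokens: List[str], context_size: int) -> List[Tuple[Tuple[str, ...], str]]:
    """Turn a token sequence into (context, target) training examples.

    No human labeling is needed: the "label" for each example is simply
    the token that follows the context window in the raw text.
    """
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tuple(tokens[i - context_size:i])
        pairs.append((context, tokens[i]))
    return pairs


# Naive whitespace tokenization, used here only for illustration.
pairs = next_token_pairs("the cat sat on the mat".split(), context_size=2)
for context, target in pairs:
    print(context, "->", target)
```

Scaling this idea up to billions of pages is what lets transformer models learn from unlabeled text.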

In the era of large language models, tools such as ChatGPT create new content by drawing on the patterns they learned from this training data.

The fast progress in large language models (LLMs), with billions or even trillions of parameters, has ushered in a new digital world. In this era, generative AI models can produce engaging text, create realistic images, and even whip up entertaining sitcoms on the spot.

Furthermore, advances in multimodal AI allow teams to create content across several media, such as text, graphics, and video. This is the basis for tools like Dall-E, which can automatically create images from a text description or generate text captions from images. These innovations illustrate the impact of foundation models on generative AI systems.

Despite these advances, we’re still in the early days of using generative AI, including platforms such as Google Cloud’s Vertex AI, to produce readable text and photorealistic stylized graphics. Early implementations have had problems with accuracy and bias and have been prone to producing strange responses.

Still, the progress so far suggests that the inherent capabilities of generative AI, delivered through platforms such as Vertex AI from Google Cloud, could fundamentally change how businesses operate. The technology could help write code, design new drugs, develop products, redesign business processes, and transform supply chains.

Generative AI History

In the 1960s, Joseph Weizenbaum created the Eliza chatbot, one of the earliest examples of generative AI. These early implementations had significant limitations: a small vocabulary, no understanding of context, and heavy reliance on hand-written patterns, which made them break easily. They were also difficult to customize and extend.
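The brittleness of pattern-based chatbots is easy to see in a toy, Eliza-style responder. This Python sketch is not Weizenbaum’s original script; the rules and the `respond` function are hypothetical examples of the keyword-and-template approach.

```python
import re

# Hypothetical Eliza-style rules: (pattern, response template).
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmother\b|\bfather\b", re.IGNORECASE), "Tell me more about your family."),
]
FALLBACK = "Please go on."


def respond(utterance: str) -> str:
    """Return the first matching rule's response, or a canned fallback."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Captured text is echoed back verbatim; nothing is "understood".
            return template.format(*(group.rstrip(".!?") for group in match.groups()))
    return FALLBACK


print(respond("I need a vacation."))
print(respond("I'm tired"))
```

“I’m tired” gets only the fallback because it does not literally contain “I am”, which is exactly the kind of brittleness that limited early chatbots.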

Around 2010, advances in neural networks and deep learning brought a resurgence in generative AI. This technology could learn to understand and generate text, classify images, and transcribe audio automatically.

In 2014, Ian Goodfellow introduced GANs, a new way to create and evaluate content variations using competing neural networks. This led to the generation of realistic people, voices, music, and text. However, it also raised concerns about the potential misuse of generative AI to create lifelike deepfakes that mimic voices and people in videos.
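For reference, the competition between the two networks can be written as the two-player minimax objective from Goodfellow et al.’s 2014 GAN paper. The discriminator $D$ tries to assign high probability to real samples $x$ and low probability to generated samples $G(z)$, while the generator $G$ tries to fool it:

```latex
\min_{G}\max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

Here $p_{\text{data}}$ is the distribution of real training data and $p_z$ is the noise distribution from which the generator draws its inputs.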

Since then, progress in other neural network techniques, including variational autoencoders (VAEs), long short-term memory (LSTM) networks, transformers, diffusion models, and neural radiance fields (NeRFs), has further expanded the capabilities of generative AI.

How Does Generative AI Work?

Generative AI is a type of artificial intelligence technology that can produce many kinds of content, from written text and imagery to audio and synthetic data.

The process starts with a prompt, which can be text, an image, a video, a design, musical notes, or any other input the AI system can process. Various AI algorithms then return new content in response, such as an essay, a solution to a problem, or a realistic fake created from pictures or audio of a person.

Early versions of generative AI required a complicated process, such as submitting data via an API. Developers had to learn specialized tools and write applications in languages such as Python.
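For a sense of what that API-based workflow involved, here is a Python sketch that only builds an HTTP request using the standard library. The endpoint `https://api.example.com/v1/generate`, the JSON fields `prompt` and `max_tokens`, and the bearer-token header are placeholder assumptions; real providers each define their own request formats.

```python
import json
import urllib.request

# Hypothetical endpoint, for illustration only.
API_URL = "https://api.example.com/v1/generate"


def build_generation_request(prompt: str, api_key: str, max_tokens: int = 200) -> urllib.request.Request:
    """Package a prompt as a JSON POST request (payload schema is assumed)."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_generation_request("Write a haiku about autumn.", api_key="YOUR_KEY")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Calling `urllib.request.urlopen(req)` would actually send the request; it is left out so the sketch runs offline.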

Now, pioneers in generative AI are building better user experiences that let you describe a request in plain language. After an initial response, you can refine the results with feedback about the style, tone, and other elements you want the generated content to reflect. This progress builds on deep learning and generative pre-trained transformers (GPTs).
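The refine-with-feedback loop can be sketched as a conversation history that grows with each turn and is passed to the model every time. In this Python sketch, `generate` is a hypothetical stand-in for a real model call, and the `role`/`content` message format is an assumed convention modeled on common chat interfaces, not a specific vendor’s API.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def refine(generate: Callable[[List[Message]], str], request: str, feedback: List[str]) -> List[Message]:
    """Run an initial request, then one refinement round per piece of feedback."""
    history: List[Message] = [{"role": "user", "content": request}]
    history.append({"role": "assistant", "content": generate(history)})
    for note in feedback:
        history.append({"role": "user", "content": note})
        # The model receives the full history, so it can revise its earlier answer.
        history.append({"role": "assistant", "content": generate(history)})
    return history


def fake_generate(history: List[Message]) -> str:
    """Hypothetical stand-in for a model call: reports how many user turns it has seen."""
    user_turns = sum(1 for message in history if message["role"] == "user")
    return f"draft {user_turns}"


history = refine(fake_generate, "Write a product blurb.", ["Make it shorter.", "Use a friendlier tone."])
for message in history:
    print(f"{message['role']}: {message['content']}")
```

Passing the whole history on each call is what lets later feedback (“make it shorter”) refer back to earlier drafts.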

Generative AI Models

Generative AI models combine various AI techniques to represent and process content. For example, to generate text, natural language processing techniques transform raw characters (letters, punctuation, and words) into sentences, parts of speech, entities, and actions, which are represented as vectors using various encoding methods.
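As a simplified illustration of turning text into vectors, this Python sketch splits a sentence into tokens, assigns each distinct token an integer ID, and encodes each ID as a one-hot vector. The tokenizer regex and helper names are illustrative assumptions; production models typically use subword tokenizers and learned dense embeddings rather than one-hot vectors.

```python
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Split text into lowercase words and punctuation marks (deliberately simple)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens: List[str]) -> Dict[str, int]:
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab: Dict[str, int] = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab


def one_hot(token_id: int, size: int) -> List[int]:
    """Encode an ID as a vector of zeros with a single 1 at that index."""
    vector = [0] * size
    vector[token_id] = 1
    return vector


tokens = tokenize("The cat sat. The cat slept!")
vocab = build_vocab(tokens)
ids = [vocab[token] for token in tokens]
vectors = [one_hot(token_id, len(vocab)) for token_id in ids]
print(tokens)
print(ids)
```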

Images are handled similarly: they are transformed into visual elements that are also expressed as vectors. It is crucial to recognize that these techniques can also encode the prejudices, discrimination, misinformation, and exaggeration contained in the training data.

Once developers settle on a way to represent the world, they apply a particular neural network to generate new content in response to a prompt. Techniques such as GANs and VAEs (the latter built from an encoder and a decoder) work well for generating realistic human faces, synthetic data for AI training, or even replicas of specific individuals.
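To show the encoder/decoder idea in a little more detail: a VAE’s encoder outputs a mean and a log-variance for each latent dimension, a latent vector is sampled with the so-called reparameterization trick, and training adds a KL-divergence penalty that keeps the latent distribution close to a standard normal. This Python sketch covers only those two pieces; the encoder and decoder networks are omitted, and the function names and example values are illustrative.

```python
import math
import random
from typing import List


def reparameterize(mu: List[float], log_var: List[float], rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), where sigma = exp(0.5 * log_var).

    In a real VAE built with an autodiff framework, writing the sample this way
    keeps z differentiable with respect to mu and log_var, so the encoder can be
    trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: List[float], log_var: List[float]) -> float:
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


# Pretend an encoder produced these statistics for one input (made-up values).
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = reparameterize(mu, log_var, random.Random(42))
print("latent sample:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))
```

A decoder would then map `z` back to an image, text, or other output; new content comes from decoding fresh samples.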

Recent progress in transformers such as Google’s BERT, OpenAI’s GPT, and Google DeepMind’s AlphaFold has produced neural networks that can not only encode language, images, and proteins but also generate new content.

What are Dall-E, ChatGPT and Bard?

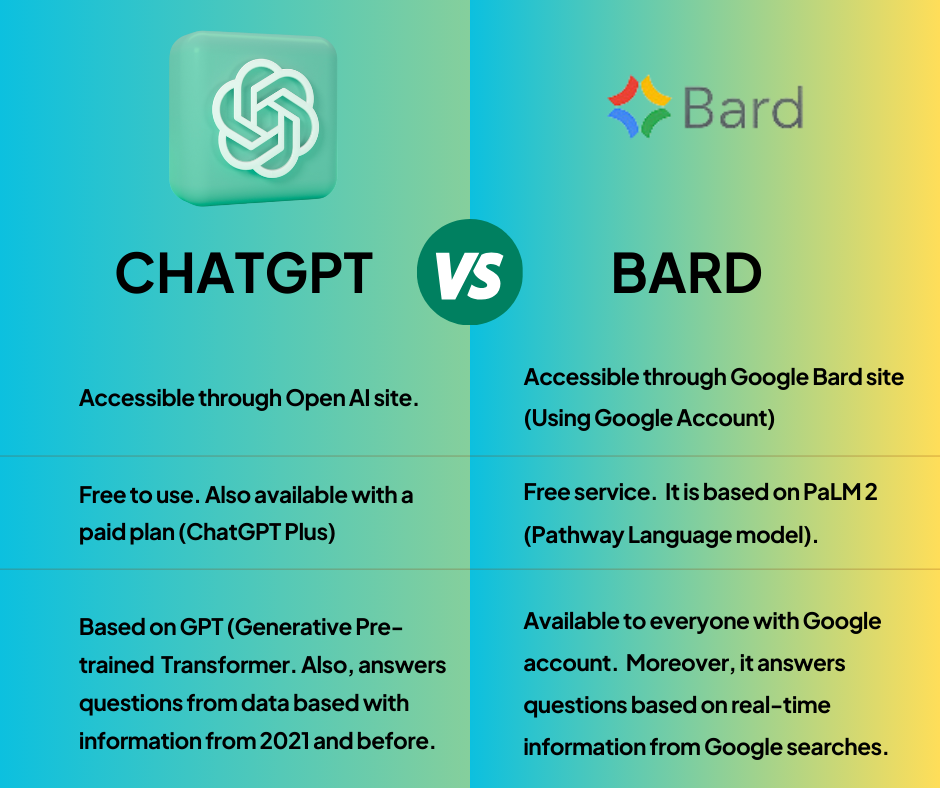

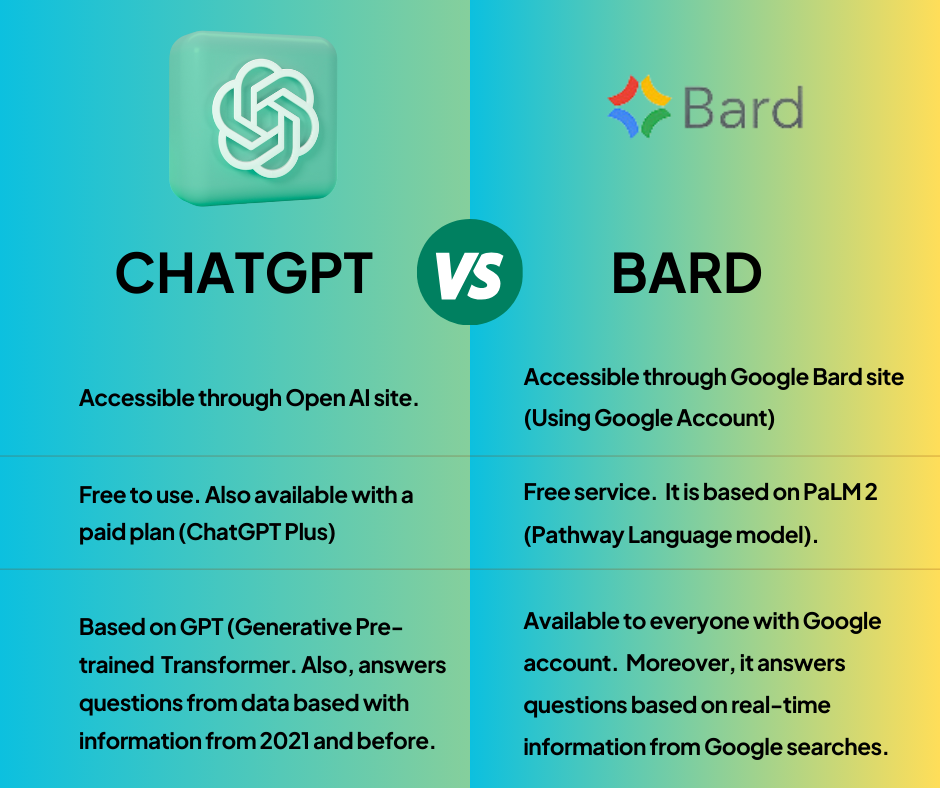

Bard, ChatGPT and Dall-E are well-known AI systems.

Dall-E: Trained on a large data set of images and their associated text descriptions, Dall-E is a multimodal AI application that connects the meaning of words to visual elements. It was built in 2021 using OpenAI’s GPT implementation. A second, more capable version, Dall-E 2, was released in 2022 and lets users generate imagery in a wide range of styles from text prompts.

ChatGPT: The popular chatbot, launched in November 2022, was built on OpenAI’s GPT-3.5. Earlier GPT versions were accessible only via an API; ChatGPT instead lets users interact with the model and refine its responses through a chat interface with interactive feedback. GPT-4 followed on March 14, 2023.

ChatGPT also incorporates the history of its conversation with a user into its results, making the interaction feel more lifelike. Following the new GPT interface’s success, Microsoft invested heavily in OpenAI and integrated a version of GPT into its Bing search engine.

Bard: Google and Microsoft both pioneered advanced AI for understanding language and other content. Google shared some of its models with researchers but did not make them publicly available. When Microsoft integrated GPT into Bing, Google quickly launched its own public chatbot, Google Bard, built on a lightweight version of its LaMDA family of language models.

However, Bard’s rushed debut contributed to a drop in Google’s stock price after the chatbot incorrectly claimed that the Webb telescope was the first to photograph a planet outside our solar system. Microsoft’s Bing chatbot and ChatGPT also produced inaccurate results and erratic behaviour in their early versions.

Google later released a new version of Bard powered by its more advanced LLM, PaLM 2, which made Bard more efficient and more visual in its responses to user queries.

What Are the Use Cases for Generative AI?

Generative AI, a fascinating type of artificial intelligence, has garnered significant attention and is widely used to create diverse content across various applications. The buzz around generative AI is fueled by breakthroughs like GPT, which can be fine-tuned for specific uses. Here are some critical use cases for generative AI:

- Implementing Intelligent Chatbots: Generative AI is harnessed to design chatbots for customer service and technical support, enhancing user interaction and problem resolution.

- Creating Compelling Deepfakes: This technology generates deepfakes, allowing for the mimicry of individuals, which finds applications in entertainment and other creative endeavours.

- Enhancing Multilingual Dubbing: Generative AI improves dubbing in movies and educational content across different languages, ensuring a seamless and natural experience for diverse audiences.

- Automating Content Writing: It automates the writing process for various content types, including email responses, dating profiles, resumes, and even term papers, saving time and effort.

- Producing Photorealistic Art: Generative AI applications can create visually stunning and photorealistic art in specific styles, offering a new dimension to artistic expression.

- Optimizing Product Demonstration Videos: Businesses leverage generative AI to enhance product demonstration videos, providing a more engaging and informative experience for potential customers.

- Innovating Drug Compound Suggestions: Generative AI is employed to suggest new drug compounds for testing, aiding in the exploration of potential breakthroughs in the pharmaceutical industry.

- Architectural and Product Design: It designs physical products and buildings and optimizes chip designs, showcasing its versatility in various design and engineering fields.

- Musical Composition: Generative AI applications extend to the field of music, where it can be used to compose music in specific styles or tones, revolutionizing the creative process for musicians.

As generative AI continues to evolve and become more accessible through platforms like AI studios, its applications diversify, offering innovative solutions across different industries and creative domains.

What are the Benefits of Generative AI?

Generative AI can be applied across many areas of a business. It can make existing content easier to understand and interpret, and it can automatically create new content. Developers are exploring how generative AI can improve existing workflows, and some are even considering redesigning workflows entirely to take full advantage of the technology.

Some advantages of using generative AI include:

- Automation of Content Creation: Generative AI streamlines the manual content writing process, saving time and resources for businesses.

- Efficient Email Responses: Implementing generative AI reduces the effort required in responding to emails, enabling quicker and more effective communication.

- Enhanced Technical Query Handling: Generative AI proves beneficial in addressing specific technical queries with improved accuracy and relevance, offering valuable support to users.

- Realistic Representations: Through generative AI, it becomes possible to build realistic representations of people, which can find applications in various fields such as virtual assistants, gaming, and simulations.

- Complex Information Summarization: Generative AI excels at summarizing intricate and complex information, transforming it into a coherent and understandable narrative. This aids in efficient knowledge dissemination.

- Adaptive Content Creation: By utilizing generative AI to design content, businesses can adapt and create content in a particular style, catering to diverse audience preferences and needs.

- Workflow Optimization: AI experts are exploring how generative AI can enhance existing workflows and even reshape them entirely. This technology offers the potential to revolutionize business processes, making them more efficient and responsive to emerging challenges.

In a recent survey by Gartner with over 2,500 executives, 38% mentioned that their main reason for investing in generative AI is to enhance customer experience and retention. Following closely are objectives like boosting revenue (26%), cutting costs (17%), and ensuring business continuity (7%).

Limitations of Generative AI

Early implementations of generative AI highlight several limitations. Some of the challenges stem from the specific approaches used to implement particular use cases. For example, a simplified summary of a complex topic is easier to read than an explanation that cites multiple sources supporting its key points. However, that readability comes at the expense of the user’s ability to verify where the information came from.

- Source Identification Challenge: Generative AI may struggle to consistently identify the source of content it generates, leading to potential issues in traceability and accountability.

- Bias Assessment Difficulty: Assessing the bias of sources can be challenging with generative AI, as it may inadvertently perpetuate biases present in the training data.

- Realism and Inaccuracy Identification: The realistic nature of generated content can make it more challenging for users to distinguish accurate information from inaccuracies, raising concerns about misinformation and reliability.

- Adaptability Issues: Tuning generative AI models for new circumstances or adapting them to evolving contexts can be complex, requiring a deep understanding of machine learning and model fine-tuning techniques.

- Overlooking Bias, Prejudice, and Hatred: The results produced by generative AI may inadvertently overlook or even amplify biases, prejudices, and hateful content in the training data, posing ethical concerns.

Generative AI, while a powerful tool for building innovative machine learning models and AI chatbots, comes with limitations that organizations and developers should carefully consider to ensure responsible and ethical use.

Attention is All You Need: Transformers Unleash a New Capacity

In 2017, Google introduced a groundbreaking neural network design called transformers, which significantly improved the efficiency and accuracy of tasks like understanding human language. These transformers use the concept of attention to mathematically describe how words or elements in data relate to and influence each other.
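The attention computation itself is compact. The “Attention Is All You Need” paper defines scaled dot-product attention as softmax(Q K^T / sqrt(d_k)) V: each query in Q is scored against every key in K, and the resulting weights are used to average the value vectors in V. Here is a plain-Python sketch of that formula, using lists instead of a tensor library for clarity; it omits the learned projections, masking, and multiple heads used in real transformers.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Turn scores into weights that sum to 1 (max subtracted for numerical stability)."""
    largest = max(row)
    exps = [math.exp(x - largest) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


# One query that closely matches the first of two keys.
print(attention([[10.0, 0.0]], [[10.0, 0.0], [0.0, 10.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

When a query closely matches one key, nearly all of the weight goes to that key’s value vector; when the keys are identical, the output is a plain average of the values.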

The key ideas behind transformers were presented in a paper titled “Attention Is All You Need.” The researchers showed that the new architecture outperformed other neural networks on tasks such as translating between English and French. Notably, it achieved higher accuracy in just a quarter of the training time.

Transformers can reveal relationships and patterns in data that may be too complex for humans to spot easily. Their arrival spurred rapid progress in transformer design, leading to large language models such as GPT-3 and improved pre-training techniques such as Google’s BERT. These advances continue to improve our ability to process and understand language.

What Are The Concerns Surrounding Generative AI?

The advent of generative AI has heralded a new era of possibilities in artificial intelligence and machine learning. However, this rapid evolution has also raised many concerns that need careful consideration.

Below are the prominent concerns associated with the current landscape of generative AI.

- Quality of Results

  - Generative AI may produce inaccurate and misleading information, raising questions about the reliability of its outputs.

  - Quality control becomes challenging, particularly when users rely on generative AI to build new AI capabilities.

- Trust and Source Provenance

  - The lack of transparency in the generative AI process makes it harder to trust the generated content and to understand its source and provenance.

  - Users may struggle to judge the authenticity and reliability of information produced by AI.

- Plagiarism Concerns

  - The ease with which generative AI can create content raises concerns about new forms of plagiarism that disregard the rights of content creators and original artists.

  - Safeguarding intellectual property becomes a critical issue in the context of AI-generated content.

- Disruption of Business Models

  - Existing business models, particularly those built around search engine optimization and advertising, may face disruption due to the capabilities of generative AI.

  - Adapting to these changes requires a reevaluation of strategies to maintain competitiveness.

- Propagation of Fake News

  - The accessibility of generative AI tools makes it easier to generate fake news, amplifying concerns about the spread of misinformation.

  - Distinguishing between authentic and AI-generated content becomes a challenge for consumers and authorities alike.

- Manipulation of Photographic Evidence

  - Generative AI can create realistic fake images, casting doubt on the authenticity of photographic evidence.

  - Instances where AI-generated content is mistaken for objective evidence may have significant societal and legal implications.

- Social Engineering Cyber Attacks

  - The ability of generative AI to impersonate individuals raises the risk of more effective social engineering cyber attacks.

  - Cybersecurity measures must adapt to the evolving threat landscape of AI-driven impersonation tactics.

In conclusion, while generative AI holds immense potential, addressing these concerns is crucial to ensure responsible and ethical deployment. Striking a balance between innovation and safeguarding against potential pitfalls is essential for the continued development and application of AI and machine learning technologies.

What Are Some Examples of Generative AI Tools?

Generative AI tools have become indispensable in many domains, enabling new content to be created across different modalities. These tools use advanced generative models to produce text, imagery, music, code, or voices. Below are some notable examples, grouped by modality:

Text Generation Tools

- GPT (Generative Pre-trained Transformer): Renowned for its language generation capabilities, GPT is a powerful tool that utilizes machine learning to create new and contextually relevant text.

- Jasper: Another text generation tool that harnesses generative AI to produce coherent and context-aware textual content.

- AI-Writer: A sophisticated writing assistant that leverages generative AI to help users craft high-quality and contextually appropriate text.

- Lex: A text generation tool designed to assist users in generating content by harnessing the power of generative AI.

Image Generation Tools

- Dall-E 2: An advanced image generation tool that employs generative AI to create diverse and imaginative images based on textual prompts.

- Midjourney: Known for its innovative approach to image generation, Midjourney utilizes generative AI to produce visually compelling and unique images.

- Stable Diffusion: A publicly released latent diffusion model that uses generative AI to create detailed, high-quality images from text prompts.

Music Generation Tools

- Amper: A music generation tool that utilizes generative AI to compose original and personalized musical pieces.

- Dadabots: Known for its avant-garde approach, Dadabots explores generative AI to create experimental and unique music compositions.

- MuseNet: A music generation tool that enables users to use generative AI to produce diverse musical compositions.

Code Generation Tools

- CodeStarter: Built to empower developers, CodeStarter employs generative AI to generate code snippets and solutions.

- Codex: A powerful code generation tool that leverages generative AI to understand context and provide accurate and relevant code suggestions.

- GitHub Copilot: Revolutionizing coding workflows, GitHub Copilot utilizes generative AI to help developers by suggesting code as they write.

Voice Synthesis Tools

- Descript: A voice synthesis tool that uses generative AI to provide realistic and natural-sounding voiceover capabilities.

- Listnr: Leveraging generative AI, Listnr enables the creation of synthetic voices for various applications.

- Podcast.ai: A tool that utilizes generative AI for voice synthesis, facilitating the generation of synthetic voices for podcasting and other audio applications.

These examples showcase the diverse applications of generative AI tools, demonstrating how businesses and individuals can use generative AI to create new and innovative content across different modalities.

Use Cases for Generative AI: By Industry

Generative AI technologies are often compared to game-changing inventions like steam power, electricity, and computing. They have the potential to impact various industries significantly.

It’s important to note that, as with past transformative technologies, it may take time to figure out the most effective ways to use generative AI. Rather than just making existing tasks faster, the goal is to find new and better ways of doing things.

Here are some examples of how generative AI applications could impact different industries:

Finance

- Enhancing fraud detection systems by analyzing transactions in the context of an individual’s history.

Legal

- Designing and interpreting contracts using generative AI.

- Analyzing evidence and suggesting legal arguments.

Manufacturing

- Identifying defective parts and root causes through the integration of data from cameras, X-rays, and other metrics.

Film and Media

- Producing content more economically and translating it into other languages in the actors’ own voices using generative AI.

Medical

- Efficiently identifying promising drug candidates through generative AI applications.

Architecture

- Designing and adapting prototypes more quickly with the help of generative AI for architectural firms.

Gaming

- Designing game content and levels using generative AI for gaming companies.

Ethics and Bias in Generative AI

Despite the potential benefits, the new generative AI tools raise concerns about accuracy, trustworthiness, bias, hallucination, and plagiarism. These ethical issues are not new to AI but may take years to address. An example is Microsoft’s 2016 chatbot, Tay, which had to be shut down due to spreading inflammatory content on Twitter.

What’s different now is that the latest generative AI apps appear more coherent on the surface. However, sounding human-like and coherent is not the same as possessing human intelligence. There is an ongoing debate about whether generative AI models can be trained to reason. One Google engineer was even fired after publicly declaring that the company’s generative AI app, Language Model for Dialogue Applications (LaMDA), was sentient.

The lifelike nature of generative AI content brings new challenges for AI safety. It becomes tough to detect AI-generated content and, more importantly, to identify errors. This becomes a significant issue when we use generative AI outcomes for tasks like coding or giving medical guidance.

Because many generative AI results are not transparent, it is hard to check for issues such as copyright violations or problems with the original sources. And without knowing how the AI reached a conclusion, it is difficult to reason about why it might be wrong.

Generative AI vs. AI

Generative AI is about producing new and original output such as content, chat responses, designs, synthetic data, or even deepfakes. It is particularly valuable in creative fields and for novel problem-solving, because it can autonomously generate many kinds of new content.

As mentioned earlier, generative AI relies on neural network techniques such as transformers, GANs, and VAEs. Other kinds of AI use techniques including convolutional neural networks, recurrent neural networks, and reinforcement learning.

Generative AI usually begins with a prompt, allowing users or data sources to give a starting query or data set to help generate content. This process can be repeated to explore different content options.

Conversely, traditional AI algorithms follow predefined rules to process data and provide results.

Each method has its pros and cons based on the problem at hand. Generative AI works well for tasks like NLP (Natural Language Processing) requiring new content creation. On the other hand, traditional algorithms are better for tasks involving rule-based processing and situations with predetermined outcomes.

Generative AI vs. Predictive AI vs. Conversational AI

Predictive AI relies on historical data patterns to predict outcomes, classify events, and offer helpful insights. This approach aids organizations in making informed decisions and crafting strategies based on data.
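As a contrast with generative models, a predictive model fits patterns in historical data and extrapolates from them. Here is a minimal Python example using an ordinary least-squares straight-line fit (one of many possible predictive techniques); the monthly sales figures are made-up numbers for illustration.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


# Hypothetical historical data: sales for months 1 through 5.
months = [1, 2, 3, 4, 5]
sales = [100, 110, 120, 130, 140]
slope, intercept = fit_line(months, sales)
forecast = slope * 6 + intercept  # predict month 6 from the fitted trend
print(f"forecast for month 6: {forecast}")
```

Here the fitted trend forecasts 150 for month 6: the model predicts a value from past patterns rather than generating new content.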

Conversational AI enables AI systems, such as virtual assistants and chatbots, to interact naturally with humans in conversation. It utilizes techniques from natural language processing (NLP) and machine learning to understand language and provide responses that feel human-like.

Best Practices for Using Generative AI

There is no denying generative AI’s capabilities. However, incorporating generative AI into your workflow requires careful consideration of several factors to ensure good outcomes. Following best practices can improve accuracy, transparency, and ease of use. Below are essential guidelines for working with generative AI effectively:

- Transparent Labeling:

  - Clearly label all generative AI content for users and consumers.

  - Enhances user understanding and trust in AI-generated output.

- Accuracy Verification:

  - Vet the accuracy of generated content by referencing primary sources.

  - Validate results against reliable data to ensure precision and reliability.

- Bias Mitigation:

  - Consider potential biases embedded in generative AI results.

  - Implement measures to identify and rectify biases for fair and unbiased outcomes.

- Code and Content Quality Assurance:

  - Double-check the quality of AI-generated code and content using supplementary tools.

  - Ensures the reliability and efficiency of the generated output.

- Tool Proficiency:

  - Learn the strengths and limitations of each generative AI tool.

  - Tailor tool selection based on specific project requirements for optimal performance.

- Failure Mode Awareness:

  - Familiarize yourself with standard failure modes in generative AI results.

  - Develop strategies to address and mitigate potential shortcomings in the generated content.

By embracing these best practices, you can confidently navigate the dynamic landscape of generative AI, ensuring the ethical, accurate, and effective deployment of this powerful technology in your workflow.

The Future of Generative AI

ChatGPT’s remarkable depth and user-friendly interface have led to the widespread adoption of generative AI. While the quick embrace of generative AI applications has highlighted challenges in deploying the technology safely, it has also sparked research into better tools for identifying AI-generated text, images, and video.

Popular generative AI tools like ChatGPT, Midjourney, Stable Diffusion, and Bard have fueled a variety of training courses for all skill levels. Some aim to assist developers in creating AI applications, while others cater to business users seeking to apply the technology across their enterprises. Over time, industries and society will develop improved tools for tracking the origin of information, enhancing trust in AI.

Generative AI will keep advancing, making strides in translation, drug discovery, anomaly detection, and creating new content, spanning text, video, fashion design, and music. While these new tools are impressive, the real impact of generative AI will be felt when integrated directly into our existing tools.

Grammar checkers will improve, design tools will seamlessly offer more helpful suggestions, and training tools will automatically identify best practices within organizations, streamlining employee training. These changes are just a glimpse of how generative AI will transform our daily tasks in the short term.

Predicting the full future impact of generative AI is challenging. Yet, as we continue to use these tools to automate and enhance human tasks, we will inevitably need to reassess the nature and value of human expertise.

Latest Generative AI Technology Defined

TERMS | DEFINITION |

|---|---|

AI Art (Artificial Intelligence Art) | AI art refers to any digital art form created or enhanced through AI tools. This encompasses a wide range of creative expressions where artificial intelligence plays a role in the artistic process. |

AI Prompt | An AI prompt serves as a means of interaction between a human and a Large Language Model (LLM), allowing the model to generate the intended output. This interaction can take various forms, including questions, text inputs, code snippets, or examples, facilitating a dynamic and responsive engagement with AI models. |

AI Prompt Engineer | An AI prompt engineer is an expert specializing in crafting text-based cues that can be interpreted and understood by large language models and generative AI tools. This role involves skillfully designing prompts to elicit desired responses from AI systems. |

Amazon Bedrock | Amazon Bedrock, also known as AWS Bedrock, is a machine learning platform deployed on the Amazon Web Services cloud computing infrastructure. It is utilized for constructing generative AI applications, showcasing the integration of advanced machine learning capabilities within the AWS environment |

Auto-GPT | Auto-GPT represents an experimental, open-source autonomous AI agent based on the GPT-4 language model. This agent autonomously strings together tasks to accomplish overarching goals defined by the user, showcasing the potential of advanced AI models in autonomous decision-making. |

Google Search Generative Experience (SGE): | Google Search Generative Experience (SGE) integrates generative AI-powered results into Google search engine queries, providing a more enriched and interactive search experience for users seeking dynamic and context-aware responses.

|

Google Search Labs (GSE) | Google Search Labs (GSE) is an initiative from Alphabet’s Google division, offering new capabilities and experiments for Google Search in a preview format before they are publicly available. This allows users to explore cutting-edge features and functionalities in search before widespread implementation. |

Image-to-Image Translation | Image-to-image translation is a generative AI technique that transforms a source image into a target image while preserving specific visual properties of the original. This approach is instrumental in various applications, from style transfer to image enhancement.

|

Inception Score (IS) | The Inception Score (IS) is a mathematical algorithm employed to assess the quality of images generated by generative AI using a Generative Adversarial Network (GAN). The term “inception” here refers to the initial spark of creativity or the beginning of a thought or action.

|

LangChain | LangChain is an open-source framework enabling software developers in the AI and machine learning domain to integrate large language models with external components. This empowers developers to create applications powered by Large Language Models (LLMs). |

Q-learning | Q-learning is a machine-learning approach facilitating iterative learning and improvement over time. This method enables a model to take correct actions through trial and error, adapting and refining its behaviour based on outcomes. |

Reinforcement Learning from Human Feedback (RLHF) | RLHF combines reinforcement learning techniques, such as rewards and comparisons, with human guidance to train an AI agent. This approach leverages human input to enhance the learning process, enabling the model to adapt more effectively. |

Retrieval-Augmented Generation (RAG) | Retrieval-Augmented Generation (RAG) is an AI framework that improves the quality of a model's responses by drawing on external sources of knowledge. Before generating an answer, the system retrieves relevant documents and passes them to the model as context, grounding its output in that information. |

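Variational Autoencoder (VAE) | A Variational Autoencoder (VAE) is a generative AI algorithm built on deep learning. An encoder maps each input to a probability distribution over a compressed latent space, and a decoder reconstructs data from points sampled in that space. Sampling new latent points generates new content, inputs that reconstruct poorly can flag anomalies, and reconstructing a corrupted input can remove noise. |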
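To make the Inception Score entry in the glossary above more concrete, here is a minimal sketch of the formula IS = exp(mean over images of KL(p(y|x) ‖ p(y))). It assumes the per-image class probabilities p(y|x) are already available; in practice they come from an Inception classifier run on the generated images, and the score is often averaged over several splits, both of which this sketch leaves out.

```python
import math

def inception_score(probs):
    """Inception Score from per-image class probabilities.

    probs: list of N probability distributions p(y|x_i) over the same classes.
    Assumption: these are supplied directly rather than computed by an
    Inception network.
    """
    n = len(probs)
    num_classes = len(probs[0])
    # Marginal class distribution p(y): the average of the per-image distributions.
    marginal = [sum(p[k] for p in probs) / n for k in range(num_classes)]
    # Average KL divergence between each conditional p(y|x_i) and the marginal p(y).
    mean_kl = 0.0
    for p in probs:
        kl = sum(pk * math.log(pk / mk) for pk, mk in zip(p, marginal) if pk > 0)
        mean_kl += kl / n
    # The score is the exponential of that average divergence.
    return math.exp(mean_kl)
```

Three images, each confidently assigned to a different class, score about 3.0 (the maximum for three classes). Three images that all land in the same class score 1.0, reflecting no diversity.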
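The Q-learning entry in the glossary above describes learning by trial and error. A minimal tabular sketch of the standard update, Q(s, a) ← Q(s, a) + α·(r + γ·max Q(s', ·) − Q(s, a)), is shown below. The five-state corridor environment, its reward, and the hyperparameter values are hypothetical choices made only for illustration.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, goal at state 4
ACTIONS = (-1, +1)  # action 0 moves left, action 1 moves right

def step(state, action):
    """Hypothetical environment: reward 1.0 and episode end on reaching the goal."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore occasionally, otherwise exploit (ties broken randomly).
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                best = max(q[state])
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward the reward plus the discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q
```

After training, the greedy policy (`max(range(2), key=lambda a: q[s][a])`) chooses "move right" in every non-goal state, the shortest path to the reward.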
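The RLHF entry in the glossary above mentions learning from human comparisons. The sketch below illustrates only the reward-modeling step, fitting a score to each response from pairwise preferences with a Bradley-Terry model, where P(A preferred over B) = sigmoid(r_A − r_B). Giving each response its own scalar reward is a simplifying assumption: in practice the reward model is a neural network that scores arbitrary text.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def fit_rewards(num_responses, preferences, lr=0.1, steps=500):
    """Fit one scalar reward per response from (preferred, rejected) index pairs.

    Simplification: a lookup table of rewards instead of a neural reward model.
    """
    r = [0.0] * num_responses
    for _ in range(steps):
        grad = [0.0] * num_responses
        for win, lose in preferences:
            # d/d(gap) of log sigmoid(r_win - r_lose) is 1 - sigmoid(gap).
            g = 1.0 - sigmoid(r[win] - r[lose])
            grad[win] += g
            grad[lose] -= g
        # Gradient ascent on the log-likelihood of the observed preferences.
        r = [ri + lr * gi for ri, gi in zip(r, grad)]
    return r
```

With the hypothetical judgments `[(0, 1), (1, 2), (0, 2)]` (response 0 beats 1, 1 beats 2, 0 beats 2), the fitted rewards rank response 0 highest and 2 lowest. In full RLHF, the language model is then optimized with a reinforcement learning algorithm such as PPO to maximize the reward model's score.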
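The RAG entry in the glossary above can be sketched as two steps: retrieve the most relevant documents, then add them to the prompt. Production systems usually rank documents with dense vector embeddings; this sketch swaps in simple bag-of-words cosine similarity and stops before the generation call, so the prompt it builds would still need to be sent to an LLM.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query (bag-of-words stand-in for embeddings)."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Augment the question with retrieved context before it reaches the generator."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"
```

Given a small, hypothetical document collection that includes "Generative adversarial networks were introduced by Ian Goodfellow in 2014.", the question "Who introduced generative adversarial networks?" retrieves that sentence, so the model answers from supplied facts rather than from memory alone.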
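For the Variational Autoencoder entry in the glossary above, the two pieces that make a VAE "variational" are the reparameterization trick (sampling z = μ + σ·ε so gradients can flow through μ and σ) and the KL term that keeps the latent distribution close to a standard normal. The sketch below shows both. The encoder and decoder networks that would produce `mu`, `log_var`, and `x_recon` are assumed, not shown, and squared error stands in for the reconstruction term.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

def vae_loss(x, x_recon, mu, log_var):
    """Negative ELBO sketch: reconstruction error (squared error, an assumption) plus KL."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)
```

Generating new content then amounts to drawing z from N(0, 1) and passing it through the trained decoder. The same reconstruction error, computed on real inputs, is what can flag anomalies.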

Generative AI FAQs

Generative AI traces its origins to the 1960s, when Joseph Weizenbaum developed the Eliza chatbot, the technology's first instance. The field advanced significantly when Ian Goodfellow introduced generative adversarial networks in 2014. The recent surge in generative AI's popularity, exemplified by tools such as ChatGPT, Google Bard, and Dall-E, stems from ongoing research into large language models (LLMs) at organizations such as OpenAI and Google.

Generative AI can take over a range of tasks, including writing product descriptions, generating marketing copy, creating web content, initiating sales outreach, answering customer queries, and producing graphics for web pages. While some companies may seek to replace human roles with generative AI, others will likely use it to augment and enhance their workforce. The impact on employment will depend on how organizations integrate the technology into their operations.

Building a generative AI model starts with finding an efficient way to encode a representation of the desired output. In text generation, for example, words can be represented as vectors that capture how similar words are, based on how often they appear in the same contexts or share a meaning. Recent progress in LLM research has extended this approach to images, sounds, proteins, DNA, drugs, and 3D designs. This allows generative AI models to represent diverse types of content and iterate efficiently on variations, opening up possibilities for innovative applications.
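One simple way to turn "words that appear in the same contexts" into vectors is to count which other words each word shares a sentence with, then compare words by cosine similarity. The sketch below does exactly that on a tiny, hypothetical corpus. Modern LLMs learn dense embeddings instead of raw counts, so treat this as an illustration of the idea rather than how any particular model encodes text.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences):
    """Represent each word by counts of the other words it shares a sentence with."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for w in words:
            for other in words:
                if other != w:
                    vectors[w][other] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity of two count vectors (Counter returns 0 for missing words)."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical toy corpus: "cat" and "dog" occur in similar contexts.
sentences = [
    "cat drinks milk",
    "dog drinks water",
    "cat chases mouse",
    "dog chases ball",
    "market stocks rose",
]
vectors = cooccurrence_vectors(sentences)
```

Here `cosine(vectors["cat"], vectors["dog"])` is 0.5 because both words co-occur with "drinks" and "chases", while `cosine(vectors["cat"], vectors["stocks"])` is 0.0 because they never share a context.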

Training a generative AI model for a particular application usually means customizing it for that use case. LLM advances, such as OpenAI's GPT models, provide a solid foundation that can be tailored to scenarios like writing text, generating code, or creating imagery. This involves adjusting the model's parameters for the specific use case and fine-tuning it on relevant training data, so the model adapts to the nuances of each task, whether responding to customer queries in a call centre or generating images based on specific content and style labels.

Generative AI promises to transform creative work by assisting artists, industrial designers, and architects in exploring variations of ideas. Artists can start with a basic concept and explore diverse designs, while industrial designers and architects can experiment with product and building variations. The democratization of creative work is also possible, allowing business users to explore product marketing imagery through text descriptions and refine results with simple commands or suggestions.

The future of generative AI involves improving user experiences and workflows, building trust in generative AI results, and letting companies customize it for their branding and communication. Integrating generative AI capabilities into more tools will streamline content-generation workflows, increasing productivity and innovation. Beyond these short-term goals, generative AI is poised to extend its reach into 3D modelling, product design, drug development, digital twins, supply chains, and business processes, helping organizations generate new ideas and explore diverse business concepts.

Generative models in natural language processing (NLP) have advanced significantly and now support a wide range of applications. One prominent example is OpenAI's GPT (Generative Pre-trained Transformer) series, including GPT-3. These models use the transformer architecture, enabling them to understand and generate coherent text based on a given prompt. Another noteworthy transformer model is BERT (Bidirectional Encoder Representations from Transformers), which is aimed less at free-form generation and more at understanding the context of words in a sentence; its bidirectional approach captures dependencies and relationships between words on both sides. T5 (Text-To-Text Transfer Transformer) is a versatile model that frames every NLP task as converting input text into target text, offering a unified approach for applications ranging from summarization to translation.
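GPT, BERT, and T5 are all built from transformer layers whose core operation is scaled dot-product attention, softmax(QKᵀ/√d_k)·V: each word's query is compared with every word's key, and the resulting weights mix the value vectors. The single-head sketch below assumes the query, key, and value vectors are supplied directly; real models derive them from token embeddings through learned projection matrices and add multiple heads and masking, all omitted here.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    queries, keys: lists of vectors of length d_k; values: one vector per key.
    Returns one output vector per query and the attention weights used.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Scaled similarity between this query and every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights
```

A query that points in the same direction as the first key puts most of its weight on that key's value, which is how attention lets a word draw information from the most relevant other words, however far apart they are.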

Whether AI will attain consciousness remains a subject of philosophical and ethical debate. Current AI systems lack self-awareness and consciousness in the human sense. They run algorithms and models trained on data, recognizing patterns and making predictions without genuine understanding. While AI can simulate human-like responses and behaviour, it lacks subjective experience and true awareness. The prospect of AI gaining consciousness raises profound ethical questions about autonomy, responsibility, and the potential impact on society. As technology advances, discussions about the ethical boundaries and implications of AI development will be crucial in shaping the future relationship between humans and intelligent machines.